Why did the AI buying assistant start a stand-up comedy career? Because it knows how to find the best "deals" of laughter!

Here is hoping ChatGPT is better at being a buying assistant than it is at writing jokes!

ChatGPT is the hottest topic in the industry and, inspired by a recent tech talk by our CTO, we used our most recent hack day to try to answer some questions about what Kogan.com could use ChatGPT for. Can we train ChatGPT with knowledge of our products so that it can be a buying assistant to our customers? Can we train it with our FAQs and customer support information so that it can help answer customer queries more efficiently? Through a few different experiments, we tried to answer these questions.

So what is ChatGPT?

ChatGPT is an advanced artificial intelligence language model developed by OpenAI that specialises in understanding and generating human-like text. It is trained on large amounts of text data and generates a response by predicting the most likely next words given the context provided, which usually leads to coherent and relevant interactions.

Where to begin?

The ChatGPT team was the largest on our hack day and involved a good mix of different types of developers. We split into two main groups: our backend developers focused on training the model with our data and creating an API to interact with it, while our mobile developers focused on adding a chat feature to our current app that would call that API.

Implementation

Training the model

Starting out, we investigated which language model would be the best fit for our application. In our assessment, well-known models like GPT 3.5/4 were best suited to precise answers, whereas models like Davinci were better at extended responses. As this was a support-type bot with a general audience, we went with Davinci for a better user experience.

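The process of training the bot involved creating a GPT vector index containing all our data, using the OpenAI language model we had selected. We used the Python library LlamaIndex to facilitate this process. Strictly speaking, this does not retrain the model itself: the index stores our documents in a searchable form, and the most relevant passages are retrieved and passed to the model alongside each question. We were then able to query our GPT vector index with our questions.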
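To make the idea of a vector index more concrete, here is a minimal sketch using only the Python standard library. It is not the LlamaIndex API and not the code from the hack day: the `ToyVectorIndex` class, the bag-of-words `embed` function and the product snippets are all assumptions made for illustration. A real index would use an embedding model instead of word counts, and the resulting prompt would be sent to the language model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count. A real index would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorIndex:
    """Stores (chunk, vector) pairs and returns the chunks closest to a question."""

    def __init__(self, chunks: list[str]) -> None:
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def top_k(self, question: str, k: int = 1) -> list[str]:
        q = embed(question)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(index: ToyVectorIndex, question: str) -> str:
    """Combine the retrieved context with the question, ready to send to a model."""
    context = "\n".join(index.top_k(question, k=1))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Hypothetical product snippets, invented for illustration.
index = ToyVectorIndex([
    "Hair dryer safety: do not use near a bathtub, shower or washbasin.",
    "Washer features: six wash programs, delay start and a child safety lock.",
])
print(build_prompt(index, "Can I use the hair dryer near the shower?"))
```

The point of the sketch is the shape of the flow: documents are indexed once, and each question only pulls in the passages that look most relevant before the model is asked to answer.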

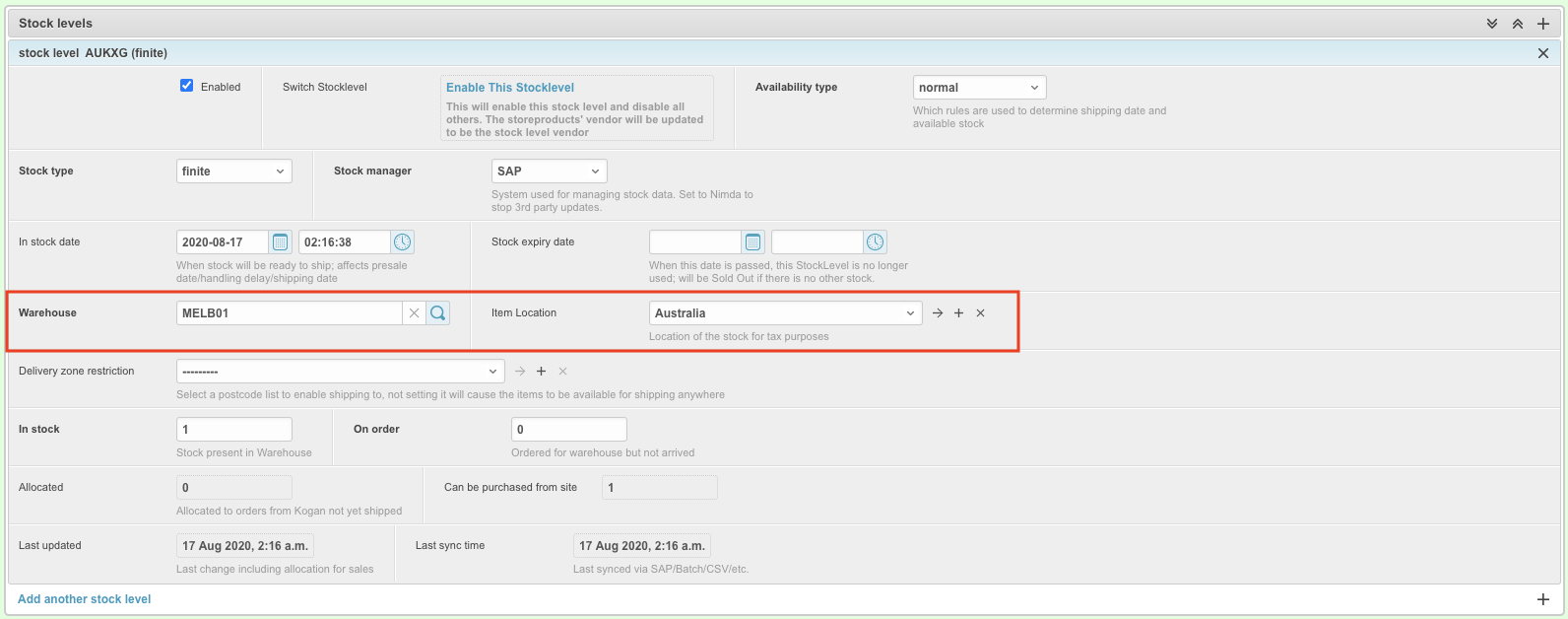

We trained one model using product descriptions and product manuals. The second used customer support FAQs and a collection of fake customer support tickets, to see if we could train positive interactions into the model.

So, with our models in hand, we jumped in with some questions!

Immediately we found that the model was using general information from our customer interactions with the support team, such as customer names, to make assumptions about the user, including their name. Questions like,

“How can I get a refund on my order”,

would often come back with responses like,

“Sure

The security and privacy implications of training a model with conversations were becoming obvious. That seemed like a bigger problem for another day, so we parked the customer support branch of this project.

We focused on the product assistant for the rest of the day, which turned out to be a good decision as we got some great results.

API Setup

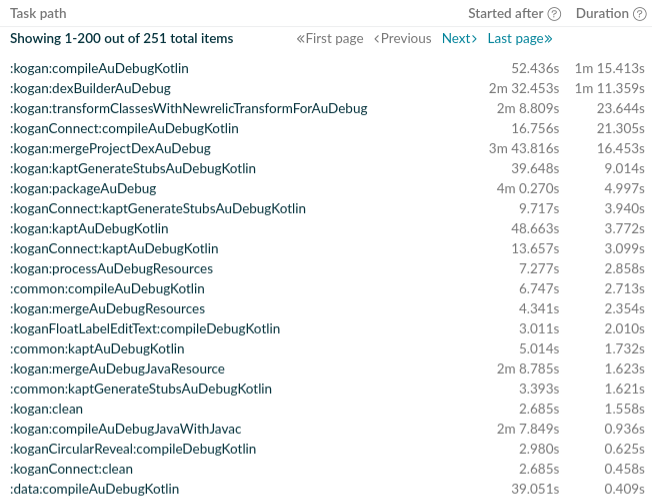

In addition to building the model, we needed to host it somewhere so that the mobile app could consume it. Initially, we started building a Lambda function behind an API Gateway in AWS to host the application. In the final hour of our hack day, we realised that the model wouldn’t fit into the Lambda function’s available space, so we quickly pivoted by spinning up an EC2 instance using AWS Lightsail and setting up Flask as our API. It was great to see the team work around issues like this as they popped up.
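As a rough illustration of what such an API endpoint has to do, the sketch below keeps the request handling in a plain function so it runs without any dependencies. The `/ask` route, the `question` field, `handle_ask` and `fake_answer` are all hypothetical names chosen for this example, not the ones used on the day, and the Flask wiring is shown only as a comment.

```python
from typing import Callable


def handle_ask(payload: object, answer_fn: Callable[[str], str]) -> tuple[dict, int]:
    """Validate a chat request and return (JSON-serialisable body, HTTP status)."""
    data = payload if isinstance(payload, dict) else {}
    question = data.get("question")
    if not isinstance(question, str) or not question.strip():
        return {"error": "Field 'question' must be a non-empty string."}, 400
    return {"answer": answer_fn(question.strip())}, 200


def fake_answer(question: str) -> str:
    """Stand-in for querying the index and the language model."""
    return f"(stub) You asked: {question}"


# In a Flask app this could be wired up roughly as follows (not executed here):
#
#   app = Flask(__name__)
#
#   @app.route("/ask", methods=["POST"])
#   def ask():
#       body, status = handle_ask(request.get_json(silent=True), fake_answer)
#       return jsonify(body), status

print(handle_ask({"question": "Which toaster suits a family of 6?"}, fake_answer))
print(handle_ask({}, fake_answer))
```

Keeping the validation separate from the web framework is a design choice for the sketch: it makes the behaviour easy to test and would have made a late switch of hosting less painful.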

Solution

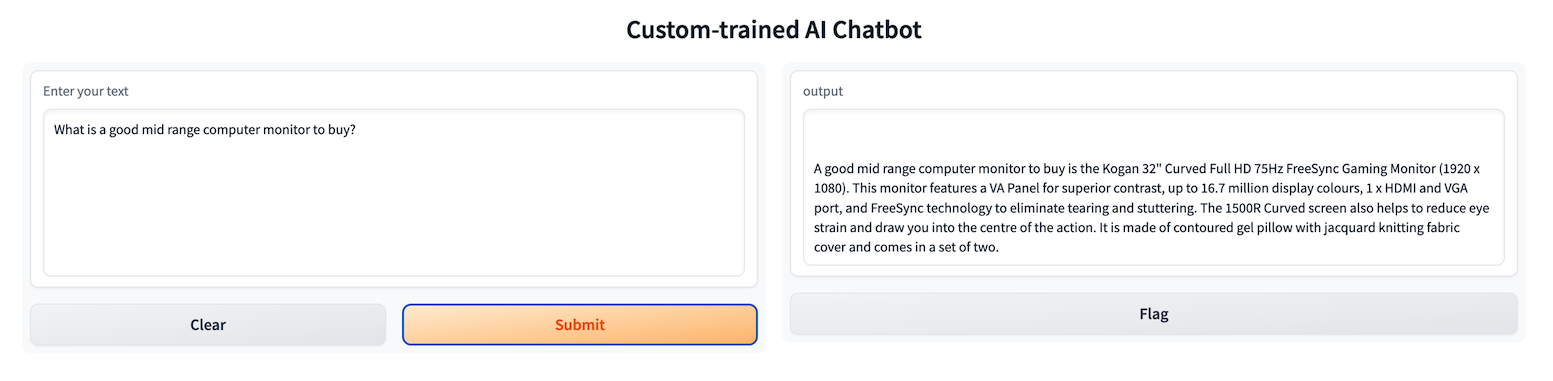

At the end of the day, the team was able to showcase a working prototype, with our mobile app integrating with the API and returning some interesting responses. The most promising response we got was to a question about safety information contained in one of our product guides.

Q: “Can I use the Estelle Hair dryer in the bathroom?”

A: “No, you should not use the Estelle Hair Dryer in the bathroom. According to the safety warnings, it should not be used in the bath or shower, near a bathtub, washbasin or other products that contain water, and should be unplugged after use and the speed and heat settings should be set as required before use if it is being used in the bathroom.”

Another example of a general question was:

Q: “What options are available in the Kogan washing machine?”

A: “The Kogan 7kg Top Load Washer offers a range of options, including 7kg clothes capacity, 6 wash programs and memory function, 8 water levels and 9 types of water power, LED display and delay start function, fuzzy logic control and detergent dissolve tech, child safety lock feature, and a drain pump filter to avoid blockages. It is made from high-quality, clear ABS material, making it easy to maintain and durable for everyday use. It also features personal preference settings, allowing you to customise your washing experience.”

At times, the model would attach information to a product that clearly didn’t belong to it, but this was always good for a laugh.

Q: “Which Kogan toaster would best suit my family of 6”

A: “The Kogan 4 Slice Toaster (Silver) would best suit a family of 6. It features two independent browning controls, seven adjustable browning settings, built-in Defrost and Reheat functions, an ultra-sleek silver design, a weight capacity of 50kgs, and a frame material of wood. It also has a removable crumb tray at the bottom for easy cleaning.”

To improve this, we could have provided our data in a more structured format, but for this experiment all our data was unformatted.
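One way to picture "more structured" data is sketched below: each product becomes a single labelled block, with the product name repeated on every spec line so an attribute is less likely to drift onto the wrong item. This is an assumption about what could help, not something we tested, and the `Product` class, `to_chunk` function and catalogue entries are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    name: str
    category: str
    specs: dict[str, str] = field(default_factory=dict)


def to_chunk(product: Product) -> str:
    """Render one product as a labelled block so its specs stay attached to its name."""
    lines = [f"Product: {product.name}", f"Category: {product.category}"]
    lines += [f"{product.name} | {key}: {value}" for key, value in product.specs.items()]
    return "\n".join(lines)


# Hypothetical catalogue entries; names and values are invented for illustration.
catalogue = [
    Product("Example 4 Slice Toaster", "Toasters", {"Browning settings": "7", "Slots": "4"}),
    Product("Example Bookshelf", "Furniture", {"Weight capacity": "50kg", "Frame material": "Wood"}),
]

# One chunk per product, ready to be indexed separately.
chunks = [to_chunk(p) for p in catalogue]
print("\n\n".join(chunks))
```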

One of the main problems we discovered was the time it took to get a response from the API. Responses often took 5-10 seconds and arrived as one complete block of text rather than word by word, as they do in the ChatGPT client in your browser. This was a limitation of the library implementation and settings we used, so it could be mitigated with a more bespoke solution.
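The difference between the two delivery styles can be sketched with a plain Python generator. Nothing here calls a real model: `generate_tokens` is a hypothetical stand-in that emits one word at a time with an artificial delay, and the function names are made up for this example.

```python
import time
from typing import Iterator


def generate_tokens(answer: str, delay: float = 0.0) -> Iterator[str]:
    """Stand-in for a model that produces its answer one token at a time."""
    for word in answer.split():
        time.sleep(delay)  # simulates per-token generation time
        yield word + " "


def respond_blocking(answer: str, delay: float = 0.0) -> str:
    """Complete-response style: nothing is returned until every token exists."""
    return "".join(generate_tokens(answer, delay)).rstrip()


def respond_streaming(answer: str, delay: float = 0.0) -> Iterator[str]:
    """Streaming style: each token is passed on as soon as it is produced."""
    yield from generate_tokens(answer, delay)


# The text appears word by word instead of all at once.
for token in respond_streaming("No, keep it away from water.", delay=0.05):
    print(token, end="", flush=True)
print()
```

The total time is the same in both cases; streaming only changes when the user starts seeing output, which is what makes the browser client feel faster.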

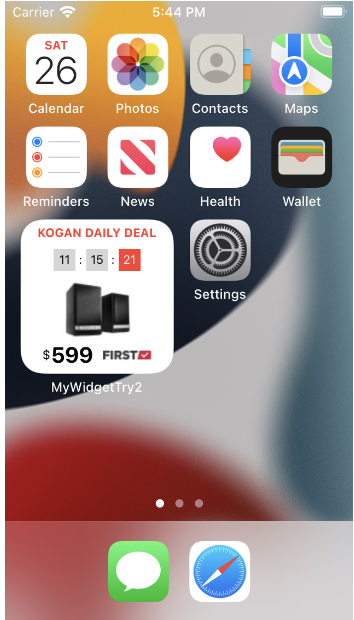

As an added bonus, our mobile developers took the time to work with SwiftUI, with one even using ChatGPT to assist along the way, and we were all impressed with the responses it gave.

Final Thoughts

Overall, it was a successful hack day for the ChatGPT team, and it highlighted the immense potential of ChatGPT use cases at Kogan.com. Hack days are a great opportunity to collaborate with other squads in the engineering team that we wouldn’t usually interact with on a daily basis.