At Kogan.com, engineering is about building software that is useful and seeing it used at scale. We work in a fast-moving e-commerce environment, so the problems are real and often complex: performance, reliability, scale, legacy constraints, new features, and tight feedback loops. We ship frequently, deploy daily, and continuously improve what is already live. You can see the impact of your work quickly, and so can our customers.

Yes, there are hackathons, plenty of snacks, meetup pizzas and team events. But what defines the culture is ownership. Engineers are trusted to make decisions, go deep into systems, challenge assumptions, and drive outcomes. That might mean building a new platform, untangling and modernising legacy code, improving observability, or removing bottlenecks that affect millions of users.

Teams are pragmatic and hands-on. We care about clean code and good architecture, but we also care about delivering value. There is a strong bias toward action and continuous improvement over perfection. Collaboration is key. Engineers work closely with product, design, data, and commercial teams. Context is shared openly, trade-offs are discussed honestly, and ideas are judged on merit.

To bring this to life, we spoke to three of our engineers about the engineering culture here:

Shams Saaticho

How do ideas go from concept to production here?

Ideas come from stakeholders, marketing, product, UX or engineering. The first step is clarity. What problem are we solving? What measurable outcomes define success? What constraints or trade-offs exist?

Engineers and stakeholders align on requirements and break larger initiatives into small, testable increments with clear acceptance criteria. Once scoped, work is prioritised in the backlog.

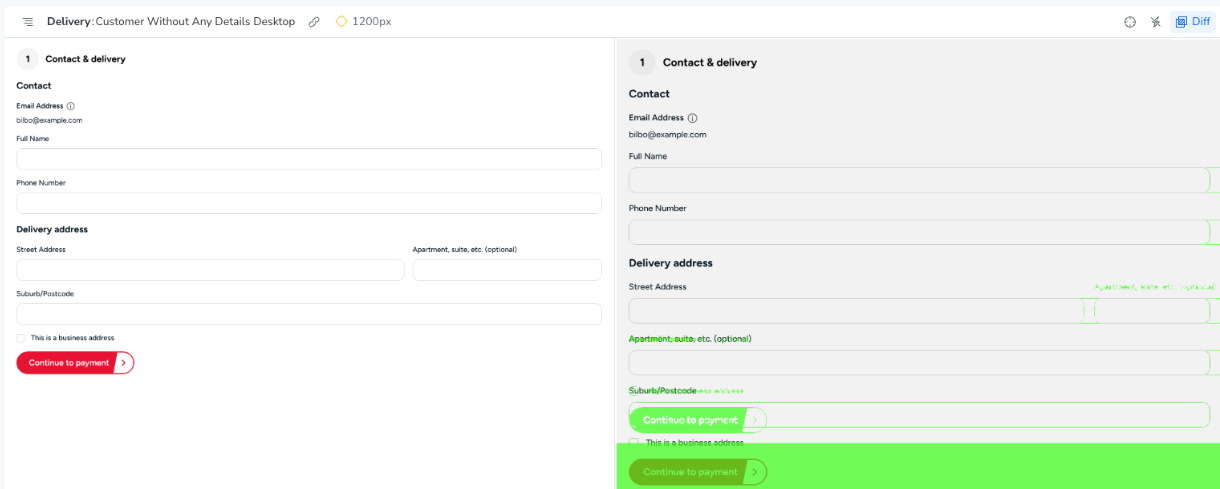

During development, changes go through peer review, automated testing and CI checks, plus user acceptance testing where needed. Deployment happens through CI/CD.

Shipping is not the finish line. We monitor production metrics and behaviour to confirm impact and guide iteration. Low-risk improvements can move from idea to production quickly, often within a day.

What does ownership mean in practice for engineers?

Ownership is responsibility for a domain, built through context and accountability. It means understanding how a system works, why it was designed that way and what trade-offs shaped it.

Engineers are expected to recommend approaches within their domain, propose refactors, and flag decisions that introduce long-term cost. Ownership is proactive, not reactive.

It does not mean working in isolation. In a large system, changes often cross services, so knowledge is shared and decisions are communicated clearly.

Ownership ultimately means end-to-end accountability. What is delivered must be reliable, secure, maintainable and scalable, with consideration for how future engineers will extend it.

Ivan Van

How do teams collaborate across Product, Design, Data, and the wider business?

At the heart of it all, we want to build the right product. That means involving the right people in the conversations that shape it. We start by clearly understanding the problem we’re trying to solve. From there, we work closely with tech leadership, product owners, and subject matter experts (SMEs), keeping them informed throughout delivery. Once the work is done, we share the outcome with the relevant stakeholders and iterate as we learn how it performs in the real world.

What’s a recent example of the team improving a process or system?

There’s always an idea floating around somewhere here at Kogan. Whether it comes from a lunch conversation, someone sharing a cool article in our eng-tech channel, or a quick mention by the water cooler, ideas for improving things are always in the air. Right now, we’re evaluating Git worktrees so that developers who use agents in their daily workflow can have several of them working concurrently and make better use of their time. We also have a hack day coming up, where everyone has the opportunity to deliver quality-of-life and product improvements.

Michael Lisitsa

What does “good engineering” look like at Kogan.com?

We ship work that creates measurable impact for customers and internal stakeholders, not just completed tickets. Decisions are grounded in data and validated in production to ensure solutions perform as expected under real conditions and scale.

We treat code as a long-term asset. That means actively reviewing legacy systems, reducing technical debt and modernising where it makes sense. Quality, maintainability and performance are considered upfront, not retrofitted later.

Engineers are expected to contribute across their team’s board and collaborate across teams when required. They are empowered to own the full life cycle of their features, growing their capabilities in front end, back end and infrastructure.

How do teams balance speed with building things sustainably?

Work is broken into small tickets so value reaches customers quickly and changes remain easy to review, test and roll back if needed.

We use AI to improve speed and lift code quality, but engineers remain accountable for every decision. AI is a productivity tool, not a replacement for judgment.

We apply the Boy Scout Rule consistently. Code is left better than it was found, with small improvements made along the way to patterns, performance and observability instead of deferring cleanup. Several times a year, Ship Frenzy creates space to clear smaller tasks that may not surface through standard prioritisation.

Planning is done just in time. We avoid deep discovery work too early, reducing wasted effort and keeping teams focused on what is ready to be built.