One of the most important things you can do for your development teams' productivity is to shorten the feedback loop during development. This applies to getting feedback from customers or stakeholders to ensure you're building the right thing, as much as it does to testing the code you're writing to ensure no bugs have crept into your change set.

Today we're focusing on the Development - Test feedback loop. Developing a change and running your regression test suite to validate that change should be as fast as possible.

We've used three different systems to run our unit tests over the last few years, beginning with a self-hosted Jenkins instance, transitioning to Travis CI, and finally arriving at Circle CI.

We have several different applications at Kogan.com, but today I'm only considering the test suite for our biggest application, the one driving the shopping website.

Slow Tests

Our test suite currently clocks in at 3610 tests. A year ago, before we moved to Circle CI, this was probably closer to 3000 tests. And those 3000 tests were taking upwards of 25 minutes to run from start to finish.

25 minutes is not a short feedback loop for a change.

Often, we'd have a change that was passing all tests and was about ready to ship in the daily deployment, but then received some last-minute feedback that required a small adjustment. That adjustment would be made, but waiting upwards of 25 minutes for it to validate would mean missing the deploy window, or holding up the deploy while the test suite did its thing. So it'd just get merged before the tests had run, because the chances were low that a bug had crept in.

We shipped most of our outages in this way.

There came a point where the team gathered and agreed to do what we could to get the test suite running in under 5 minutes. A 5 times improvement is massively ambitious, but we wanted the threshold to be "push your changes, grab a coffee, verify all tests have passed". 5 minutes is enough to do some admin work, write up some docs, check your email, and remain somewhat in context. 25 minutes breaks your context every time.

Faster Tests

There were a few strategies we used to get the runtime of our test suite down, beginning with profiling test durations and aggressively optimising the slowest tests, or deleting them if they had little value.
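Profiling can start from whatever timing data the test runner already emits. As a rough sketch, assuming a JUnit-style XML report (the kind pytest writes with `--junitxml`), the slowest tests can be ranked with the standard library alone. The report contents and test names below are hypothetical, made up for illustration.

```python
import xml.etree.ElementTree as ET


def slowest_tests(junit_xml: str, limit: int = 20) -> list[tuple[str, float]]:
    """Return the `limit` slowest test cases as (test id, seconds) pairs."""
    root = ET.fromstring(junit_xml)
    timings = []
    # iter() finds <testcase> elements whether the root is <testsuites> or <testsuite>.
    for case in root.iter("testcase"):
        test_id = f"{case.get('classname', '')}::{case.get('name', '')}"
        timings.append((test_id, float(case.get("time", 0.0))))
    # Slowest first, so the best optimisation candidates are at the top.
    return sorted(timings, key=lambda item: item[1], reverse=True)[:limit]


# Hypothetical report contents, for illustration only.
SAMPLE_REPORT = """
<testsuites>
  <testsuite name="pytest">
    <testcase classname="apps.cart.tests" name="test_checkout" time="4.20"/>
    <testcase classname="apps.cart.tests" name="test_add_item" time="0.05"/>
    <testcase classname="apps.search.tests" name="test_reindex" time="9.75"/>
  </testsuite>
</testsuites>
"""

if __name__ == "__main__":
    for test_id, seconds in slowest_tests(SAMPLE_REPORT, limit=2):
        print(f"{seconds:6.2f}s  {test_id}")
```

A ranking like this is only a starting point: a slow test may still be worth keeping if it guards something important.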

A few of the tactics we employed:

- Reducing the amount of test data required for a test

- Eliminating any network calls that should have been isolated

- Reducing or eliminating any SQL queries we could

- Moving test data setup to setUpTestData, which runs once per test class rather than once per test

The one strategy that really looked interesting was a built-in Django feature for running the test suite in parallel. Django's documentation describes it like this:

Runs tests in separate parallel processes. Since modern processors have multiple cores, this allows running tests significantly faster.

But, there is a catch:

Each process gets its own database. You must ensure that different test cases don’t access the same resources. For instance, test cases that touch the filesystem should create a temporary directory for their own use.

While Django will ensure that each parallel process gets its own isolated database, it does nothing to ensure that any other shared resources are also isolated. We had both Redis and Elasticsearch in our project, and there was no mechanism to ensure that we could isolate those dependencies.

NOTE

Django could assist here in a fairly basic way. When it spins up parallel processes, it could give each process an integer as an environment variable that the application could then use to do its own isolation. Example:

    TEST_PROCESS=3
    CACHE_URL = f"redis://host:port/{os.environ['TEST_PROCESS']}"

Test Splitting

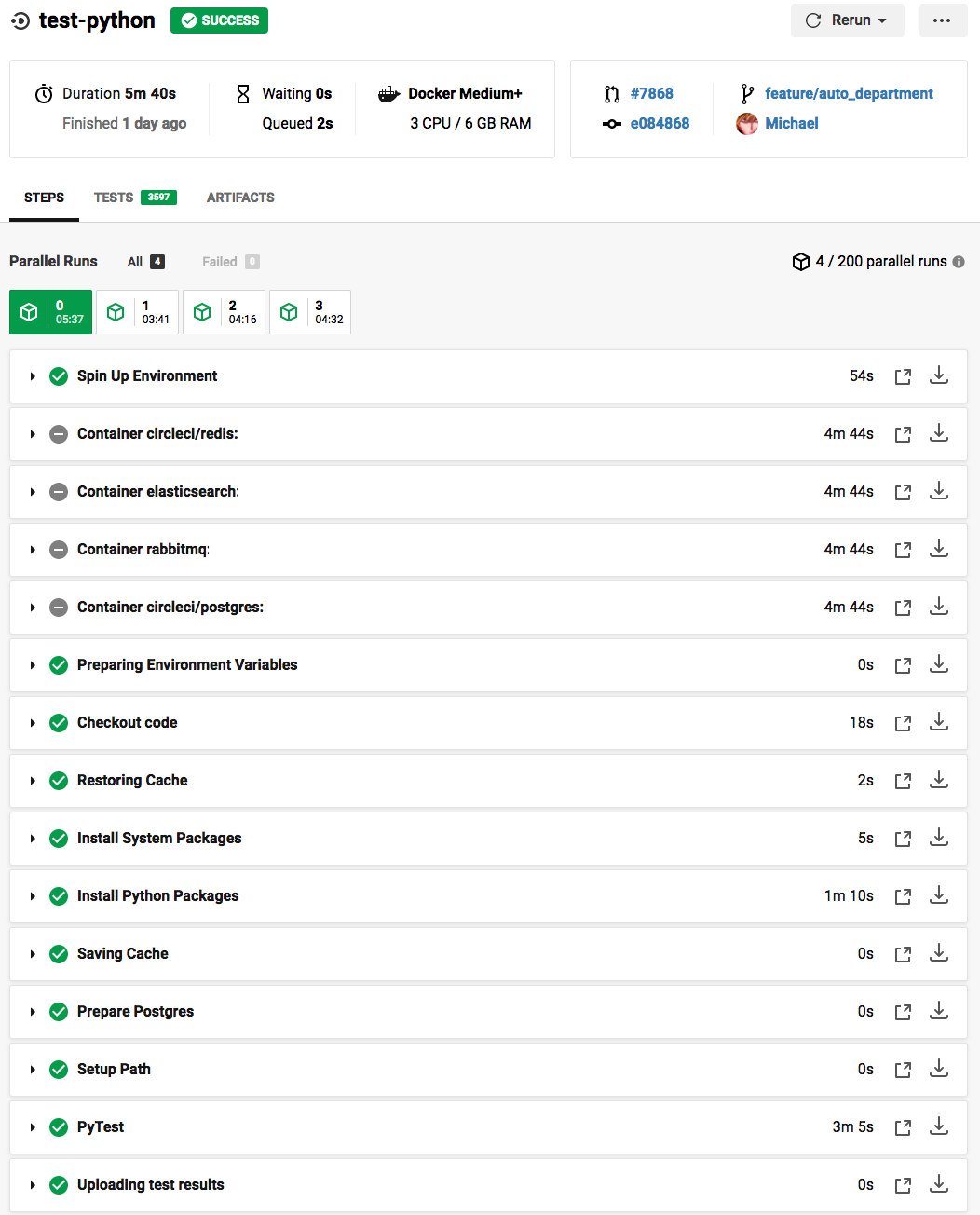

We had been chatting to the team at Circle CI, and they were convinced that they could help us get the runtime of our test suite to under 5 minutes. By using a combination of bigger instance types and test splitting we would be able to meet our goals.

Circle CI approaches test splitting by partitioning the test suite and running each partition in a completely isolated environment. If you want to run with a parallelism of 4, then you get 4 instances, 4 databases, 4 Redis nodes, and 4 Elasticsearch instances. Each set of services is run within Docker, using a familiar YAML format that most CI services have converged on.

Where Circle CI differs from other systems is that their CLI tool will split your test suite for you, in a deterministic way, and distribute it evenly over the number of executors you've declared. Most interestingly, the test suite can be split by timing data, so that each of your executors should have a fairly consistent run time.

And, true to their word, we were able to get our test suite run time down to under 5 minutes. As timing information changes, sometimes executors will unbalance, but that resolves itself over time.
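To make the idea of timing-based splitting concrete, here is a minimal sketch of one common approach: a greedy, longest-first partition that always hands the next test file to the least-loaded executor. This is an illustration of the general technique, not Circle CI's actual algorithm, and the file names and timings are hypothetical.

```python
import heapq


def split_by_timings(timings: dict[str, float], buckets: int) -> list[list[str]]:
    """Greedy longest-first partition: give each file to the least-loaded bucket."""
    # Heap entries are (total seconds assigned so far, bucket index).
    heap = [(0.0, index) for index in range(buckets)]
    heapq.heapify(heap)
    assignment: list[list[str]] = [[] for _ in range(buckets)]
    # Sorting by (-duration, name) keeps the result deterministic across runs.
    for name, seconds in sorted(timings.items(), key=lambda item: (-item[1], item[0])):
        total, index = heapq.heappop(heap)
        assignment[index].append(name)
        heapq.heappush(heap, (total + seconds, index))
    return assignment


# Hypothetical per-file timings in seconds, for illustration only.
TIMINGS = {
    "cart/tests/test_checkout.py": 90.0,
    "search/tests/test_index.py": 70.0,
    "orders/tests/test_refunds.py": 60.0,
    "users/tests/test_login.py": 40.0,
    "cart/tests/test_coupons.py": 30.0,
    "users/tests/test_profile.py": 10.0,
}

if __name__ == "__main__":
    for index, files in enumerate(split_by_timings(TIMINGS, buckets=2)):
        total = sum(TIMINGS[name] for name in files)
        print(f"executor {index}: {total:.0f}s {files}")
```

With these made-up numbers the two executors end up with 160 and 140 seconds of work. When the recorded timings drift from reality, a partition like this becomes lopsided until fresh timings are collected, which is consistent with the unbalancing we see from time to time.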

Caveats

Test splitting comes with some downsides. In particular, we haven't figured out an easy way to aggregate 4 separate code coverage outputs into a single output. Each of the 4 coverage reports shows about 30% coverage, but aggregated would be > 80%.
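The gap between the per-executor numbers and the aggregate follows from each executor exercising a different slice of the code. A toy sketch of the arithmetic, using hypothetical data (a 100-line module and four overlapping sets of executed lines), shows why the combined figure is the union of the parts and not their average. coverage.py does ship a `coverage combine` command for merging data files, but collecting those files from each executor is a separate problem that this sketch does not address.

```python
def coverage_percent(executed: set[int], total_lines: int) -> float:
    """Percentage of lines executed at least once."""
    return 100.0 * len(executed) / total_lines


def combine(shards: list[set[int]]) -> set[int]:
    """Union the executed-line sets reported by each shard."""
    combined: set[int] = set()
    for shard in shards:
        combined |= shard
    return combined


# Hypothetical data: a 100-line module, each shard exercising a different slice.
TOTAL_LINES = 100
SHARDS = [
    set(range(0, 30)),
    set(range(20, 50)),
    set(range(40, 70)),
    set(range(60, 90)),
]

if __name__ == "__main__":
    for index, shard in enumerate(SHARDS):
        print(f"shard {index}: {coverage_percent(shard, TOTAL_LINES):.0f}%")
    print(f"combined: {coverage_percent(combine(SHARDS), TOTAL_LINES):.0f}%")
```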

It's also more expensive, if that wasn't obvious. Circle CI uses a credits system for billing. Running 4 processes uses more than 4x the credits, as you're now waiting for 4 separate environments to provision before you can actually run your tests. Considering the cost of a developer waiting for 25 minutes, the extra spend is certainly worth it. You can also gate test runs behind other quality checks so you aren't burning credits for no reason.

The config

It wasn't as straightforward as we'd hoped to get test splitting running correctly. There weren't a tonne of resources available for splitting Django tests, considering Circle had just migrated to their v2 test scripts. There was some back and forth, but we arrived at a config that worked perfectly for us. I'll share it in its entirety, with private details removed, so that it might help others in the future.

    version: 2.1

    workflows:
      version: 2
      test:
        jobs:
          - test-python

    jobs:
      test-python:
        docker:
          - image: kogancom/circleci-py36-node
          - image: circleci/redis
          - image: elasticsearch
            name: elasticsearch
          - image: rabbitmq
          - image: circleci/postgres
            environment:
              POSTGRES_USER: postgres
        resource_class: medium+
        parallelism: 4
        steps:
          - checkout
          - restore_cache:
              keys:
                - pip-36-cache-{{ checksum "requirements/production.txt" }}-{{ checksum "requirements/develop.txt"}}
          - run:
              name: Install System Packages
              command: |
                sudo apt update
                sudo apt install -y libyajl2 postgresql-client libpq-dev
          - run:
              name: Install Python Packages
              command: pip install --user --no-warn-script-location -r requirements/develop.txt
          - save_cache:
              key: pip-36-cache-{{ checksum "requirements/production.txt" }}-{{ checksum "requirements/develop.txt"}}
              paths:
                - /home/circleci/.cache/pip
          - run:
              name: Prepare Postgres
              command: |
                psql -h localhost -p 5432 -U postgres -d template1 -c "create extension citext"
                psql -h localhost -p 5432 -U postgres -d template1 -c "create extension hstore"
                psql -h localhost -p 5432 -U postgres -c 'create database xxx;'
          - run:
              # pip install --user installs scripts to ~/.local/bin
              name: Setup Path
              command: echo 'export PATH=/home/circleci/.local/bin:$PATH' >> $BASH_ENV
          - run:
              name: PyTest
              no_output_timeout: 8m
              environment:
                DJANGO_SETTINGS_MODULE: xxx.settings.test
                TEST_NO_MIGRATE: '1'
                BOTO_CONFIG: /dev/null
                DATABASE_HOST: localhost
                DATABASE_NAME: xxx
                DATABASE_USER: postgres
                DATABASE_PASSWORD: xxx
                REDIS_HOST: localhost
                BROKER_URL: 'pyamqp://xxx:yyy@localhost:5672//'
              command: |
                cd myapp
                mkdir test-reports
                TESTFILES=$(circleci tests glob "**/tests/**/test*.py" "**/tests.py" | circleci tests split --split-by=timings)
                # coverage disabled until we can find a way to aggregate parallel cov reports
                # --cov=apps --cov-report html:test-reports/coverage
                pytest --rootdir="." --tb=native --durations=20 --nomigrations --create-db --log-level=ERROR -v --junitxml=test-reports/python/junit.xml --timeout=20 $TESTFILES
          - store_test_results:
              path: myapp/test-reports

What else can you do?

Running your test suite in parallel across multiple nodes is great at reducing the feedback loop for developers, but it does nothing to decrease the total runtime of your test suite. Indeed, it takes longer in absolute CPU time as N environments now need to be provisioned. To reduce your costs and get an even tighter feedback loop, you have to optimise your actual tests too.
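The trade-off can be put into a back-of-the-envelope model. The sketch below assumes a perfectly even split, identical instance sizes, and a fixed provisioning cost per executor; the 24-minute and 1-minute figures are hypothetical and are not measurements from our pipeline.

```python
def wall_clock_minutes(test_minutes: float, provision_minutes: float, executors: int) -> float:
    """Time a developer waits: one provisioning step plus an even share of the tests."""
    return provision_minutes + test_minutes / executors


def compute_minutes(test_minutes: float, provision_minutes: float, executors: int) -> float:
    """Total machine time: every executor pays the provisioning cost."""
    return executors * provision_minutes + test_minutes


# Hypothetical numbers, for illustration only.
TEST_MINUTES = 24.0
PROVISION_MINUTES = 1.0

if __name__ == "__main__":
    for executors in (1, 2, 4):
        wait = wall_clock_minutes(TEST_MINUTES, PROVISION_MINUTES, executors)
        spend = compute_minutes(TEST_MINUTES, PROVISION_MINUTES, executors)
        print(f"{executors} executor(s): wait {wait:.0f} min, compute {spend:.0f} min")
```

Under these assumptions, going from 1 to 4 executors cuts the wait from 25 to 7 minutes while total compute rises from 25 to 28 minutes. Only making the tests themselves faster shrinks both numbers at once.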

I've covered some of those methods above, but if you're looking for real, practical advice on speeding up your tests, Adam Johnson, a Django contributor, has just released a book titled Speed Up Your Django Tests.

Come work for us

And if optimising test suites to increase developer productivity and happiness is interesting to you, hit us up on our careers page! We'd love to chat.