This is part 2 of our Kubernetes hackday series. You can find Part 1 here, which covers how we spent the day and what our goals and motivations were.

For part 2, we're going to delve into the architecture required for running a Django application on Kubernetes, as well as some of the tooling we used to assist us.

This post assumes some knowledge of deploying and operating a production web application. I'm not going to spend much time going over the terminology that Kubernetes uses either. What I hope to do in this post is present enough information to kickstart your own migration. Kubernetes is big, and knowing what to research now and what to put off until later is really tricky.

I'm also going to describe this deployment in terms of Amazon EKS rather than Google GKE simply because I was on the EKS team, and most of our applications are already on AWS.

Disclaimer

We're not yet running any production apps on Kubernetes, but we've done a lot of the analysis and are comfortable with the high-level design. If you're looking to migrate yourself, feel free to use this design as a jump start, but know there'll be a lot more detail to deal with.

Current Django Architecture

Before discussing where we're going, it's helpful to know where we are. When deploying a new Django application, we usually have the following components.

- Application server(s) (Gunicorn) fronted by Nginx

- Worker server(s) (Celery)

- Scheduler server (Celery beat + shell, single instance)

- Database (Postgres)

- Cache (Redis usually, sometimes Memcache)

- Message Server for Celery (Redis or RabbitMQ)

- Static content pushed to and served from S3

- A CDN in front of the static content on S3

The scheduler server is a single instance that we use for our cron tasks, and for production engineers to ssh to when they need to do production analysis. It's incredibly important for our workflows to maintain this capability when moving to Kubernetes.

The Cluster

EKS requires you to set up the VPC and subnets that'll be used to host the master nodes of the cluster. Thankfully, AWS provides CloudFormation templates that do exactly this.

The Getting Started section is required reading, and will walk you through the rather complicated process for setting up a cluster, authentication, the initial worker nodes, and configuring kubectl so you can operate the cluster from the command line.

This setup is one area where EKS lags well behind the GKE experience. Creating a fully operational Kubernetes cluster in the GCP console is literally a few clicks.

Minikube

Minikube is a single-node local Kubernetes deployment that you can run on your laptop. If you want to experiment with Kubernetes but don't want the hassle of spinning up a production-grade cluster in the cloud, Minikube is an excellent option.

Because EKS took some time to get operational, the rest of the team members worked exclusively on Minikube until the final hour. Minikube has some limitations, but was excellent for a development environment.

Kubernetes Design

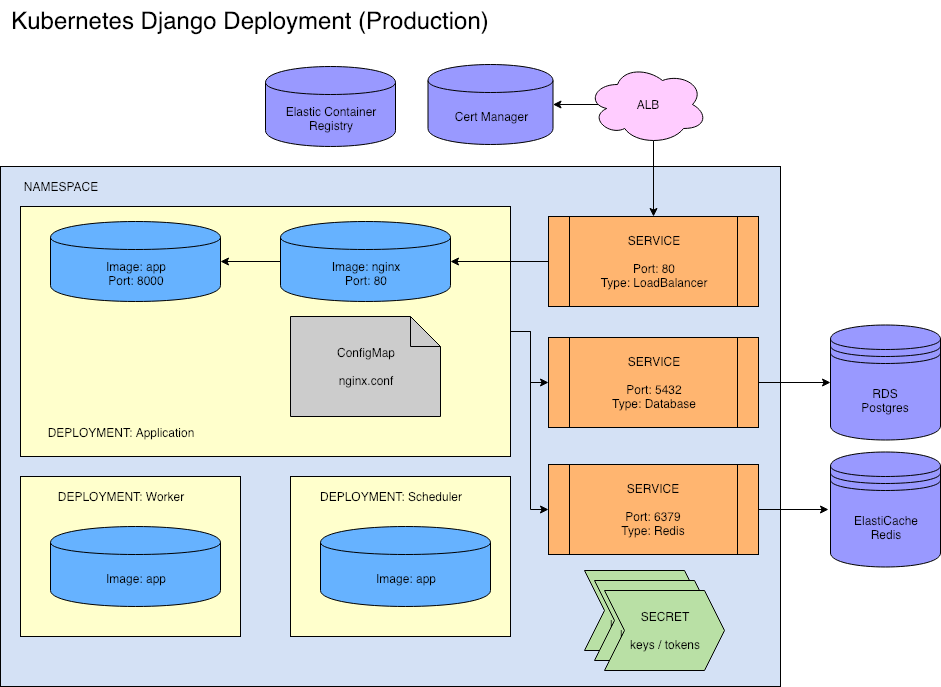

The very first task we set out to do was to come up with an architecture, in Kubernetes terms, that would support our application and our desired workflows. The final design ended up somewhat different from the initial one based on our learnings, but it gave us a shared language and allowed us to partition the tasks properly.

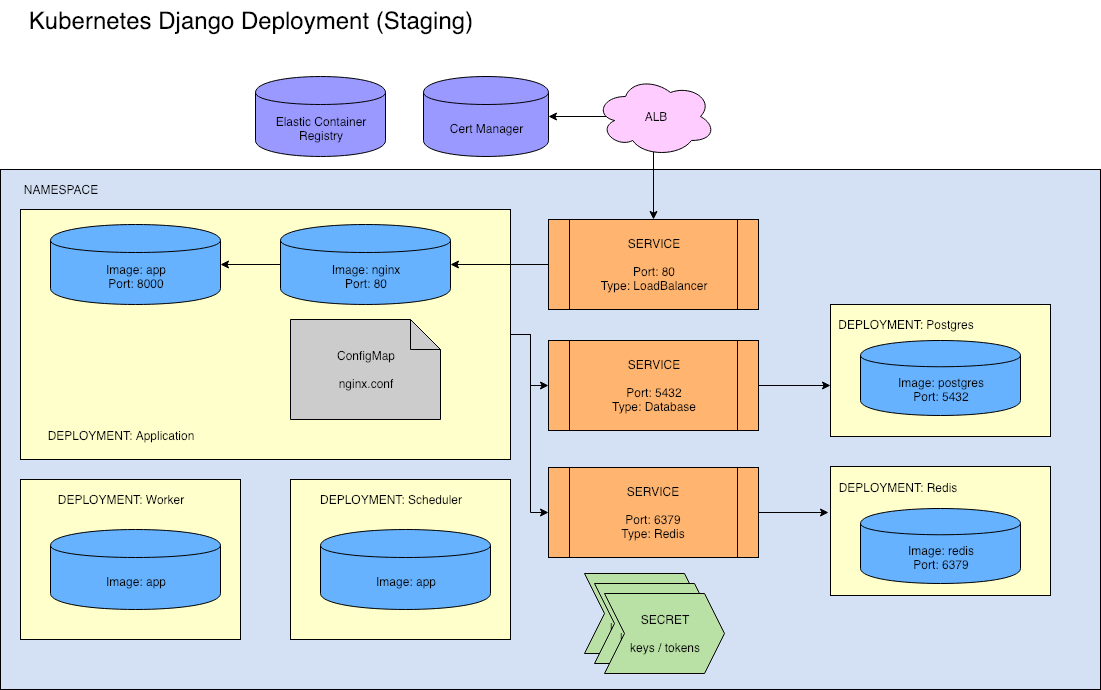

There are actually two designs, because the staging architecture differs from the production architecture. We can have tens of staging environments at any time, so being able to easily bring up and destroy environments is highly desirable.

For this reason, our staging design does not use cloud services like RDS or ElastiCache, which would require coordinating deployments between Kubernetes and various cloud provider SDKs. Instead, we deploy Postgres and Redis within the cluster.

Note: We love RDS. Properly configuring, backing up, monitoring, and updating services like Postgres and Redis isn't trivial. We trust cloud providers to do it much better than our small team can, especially on Kubernetes where our experience is, well, very little. But if our Staging database deployment crashes, it's not a critical event.

The only real difference between the two environments is that the Services either point externally to a managed data store, or internally to a datastore. The applications themselves remain blissfully unaware.
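As a rough sketch of that idea, the snippet below builds two Service manifests with the same name: one is an `ExternalName` alias for a managed database, the other selects in-cluster Pods by label. The helper `datastore_service`, the host name, and the `app: postgres` label are all hypothetical, and the manifests are emitted as JSON (which `kubectl` accepts) only to keep the example dependency-free.

```python
import json


def datastore_service(name, port, external_host=None, selector=None):
    """Build a Service manifest for a data store.

    With external_host set, the Service is a DNS alias (type ExternalName)
    for a managed instance. Otherwise it routes to in-cluster Pods that
    match the given label selector.
    """
    if (external_host is None) == (selector is None):
        raise ValueError("pass exactly one of external_host or selector")

    manifest = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
    }
    if external_host is not None:
        manifest["spec"] = {"type": "ExternalName", "externalName": external_host}
    else:
        manifest["spec"] = {
            "selector": selector,
            "ports": [{"port": port, "targetPort": port}],
        }
    return manifest


# Production: alias for a managed database (hypothetical host name).
production = datastore_service(
    "postgres", 5432, external_host="mydb.example.us-east-1.rds.amazonaws.com"
)
# Staging: route to Postgres Pods running inside the cluster (hypothetical label).
staging = datastore_service("postgres", 5432, selector={"app": "postgres"})

print(json.dumps(production, indent=2))
print(json.dumps(staging, indent=2))
```

Either way the application connects to the host `postgres`, so its settings do not need to know which environment it is running in.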

Deployments, Containers, and Services, OH MY

You may have heard of Pods or Services but don't really understand how all of these concepts fit together. The docs are dense, and knowing where to begin learning is half the battle. Let me give a quick run down of the Kubernetes objects we're using here.

Namespaces let you group together related components so they can communicate. Namespaces aren't (on their own) a security mechanism. Use namespaces to segment apps you trust, or different environments for a single app.

Deployments are like an auto-scaling group for a Pod. A Pod has (usually) a single container, but may have multiple containers if they are co-dependent. Containers within a Pod can share volumes and can communicate directly over localhost.

Services are basically TCP load balancers. They are capable of a lot more, but for our purposes, that's what we're using them for.

ConfigMaps can be environment variables or entire files that are injected into a Pod.

Secrets like passwords are injected into the cluster, and then made available to Deployments at runtime.
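To make the relationships between these objects concrete, here is a minimal sketch that wires a ConfigMap, a Secret, a Deployment, and a Service together. Every name, the image reference, and the values are placeholders rather than our actual configuration, and the objects are written as Python dicts dumped to JSON purely so the example runs without extra libraries.

```python
import json

APP = "django-app"  # hypothetical application name

config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": f"{APP}-config"},
    "data": {"DJANGO_SETTINGS_MODULE": "myproject.settings"},
}

secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": f"{APP}-secrets"},
    "type": "Opaque",
    # Placeholder only: real secret values should not live in source control.
    "stringData": {"SECRET_KEY": "change-me"},
}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP},
    "spec": {
        "replicas": 2,
        # The selector must match the labels on the Pod template below.
        "selector": {"matchLabels": {"app": APP}},
        "template": {
            "metadata": {"labels": {"app": APP}},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "registry.example.com/django-app:1.0.0",
                        "ports": [{"containerPort": 8000}],
                        # Expose every ConfigMap and Secret key as an env var.
                        "envFrom": [
                            {"configMapRef": {"name": config_map["metadata"]["name"]}},
                            {"secretRef": {"name": secret["metadata"]["name"]}},
                        ],
                    }
                ]
            },
        },
    },
}

# The Service load balances across Pods carrying the same label.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": APP},
    "spec": {"selector": {"app": APP}, "ports": [{"port": 80, "targetPort": 8000}]},
}

bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [config_map, secret, deployment, service],
}
print(json.dumps(bundle, indent=2))
```

Assuming the script is saved as `manifests.py`, something like `python manifests.py | kubectl apply -n my-namespace -f -` would create all four objects in one namespace.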

I encourage you to read all of the Concepts documentation in time. But do so after you have a handle on the above types first. There's lots you can ignore as implementation details you won't have to deal with directly.

Application Design

Unless you're already following Continuous Delivery, it's likely that your application is going to need to change in some way to be compatible with a Kubernetes deployment. Here are a few things that we needed to change.

Static Files

Deploy them to S3? Bundle a copy inside each container? Use Nginx? This choice can be particularly contentious. Here's my advice.

Use Whitenoise so that Django is in charge of serving static content, then put a CDN in front of it. If, for some reason, you don't trust Whitenoise, then use Nginx within the same Pod to serve the files, and still put a CDN in front of it.
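For reference, a Whitenoise setup in `settings.py` might look roughly like the fragment below. It assumes Django 4.2 or later (older versions use the `STATICFILES_STORAGE` setting instead of `STORAGES`), and the `DJANGO_STATIC_HOST` variable holding the CDN hostname is a convention borrowed from the Whitenoise documentation rather than something Django requires.

```python
import os
from pathlib import Path

# A real settings.py usually derives BASE_DIR from __file__; cwd keeps this sketch standalone.
BASE_DIR = Path.cwd()

MIDDLEWARE = [
    "django.middleware.security.SecurityMiddleware",
    # WhiteNoise should sit directly after SecurityMiddleware.
    "whitenoise.middleware.WhiteNoiseMiddleware",
    "django.contrib.sessions.middleware.SessionMiddleware",
    "django.middleware.common.CommonMiddleware",
]

# When set (for example "https://cdn.example.com"), static URLs point at the CDN,
# which pulls the files from the app on first request.
STATIC_HOST = os.environ.get("DJANGO_STATIC_HOST", "")
STATIC_URL = STATIC_HOST + "/static/"

# collectstatic writes here at image build time.
STATIC_ROOT = BASE_DIR / "staticfiles"

STORAGES = {
    "default": {"BACKEND": "django.core.files.storage.FileSystemStorage"},
    # Compressed files with hashed names, so the CDN can cache them indefinitely.
    "staticfiles": {
        "BACKEND": "whitenoise.storage.CompressedManifestStaticFilesStorage",
    },
}
```

Running `python manage.py collectstatic --noinput` during the image build means every Pod ships with its own copy of the static files.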

Shared Storage

Maybe you're using an NFS mount for uploaded media? Stop doing that. Use an object storage service (like S3) instead.

That said, you'll still need to tell Kubernetes what type of storage engine it should use for local volumes. Before Kubernetes 1.11 (EKS), you will need to explicitly configure a storage class. See Storage Classes documentation for setting this up.

Config Files

You might have config files for services like New Relic or similar. Deploy these with ConfigMaps instead.

Secrets / Config Variables

Use Secrets for sensitive material or ConfigMaps otherwise. Consume variables from environment variables within the app.
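Inside the app, that can be as simple as a couple of helpers in `settings.py`. This is only a sketch: the helper names `env` and `env_bool` and the variable names are made up for illustration, and the `setdefault` calls exist solely so the example runs outside a cluster.

```python
import os


def env(name, default=None):
    """Return an environment variable, failing loudly if a required one is missing."""
    value = os.environ.get(name, default)
    if value is None:
        raise RuntimeError(f"missing required environment variable: {name}")
    return value


def env_bool(name, default=False):
    """Interpret common truthy strings such as "1", "true", "yes", or "on"."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() in {"1", "true", "yes", "on"}


# Demo values only: in the cluster these come from a Secret and a ConfigMap.
os.environ.setdefault("SECRET_KEY", "dev-only-secret")
os.environ.setdefault("DATABASE_HOST", "postgres")

SECRET_KEY = env("SECRET_KEY")
DEBUG = env_bool("DJANGO_DEBUG")
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "HOST": env("DATABASE_HOST"),
        "PORT": env("DATABASE_PORT", "5432"),
        "NAME": env("DATABASE_NAME", "app"),
    }
}
```

Failing at startup when a required variable is missing tends to be easier to debug than a Pod that starts and then misbehaves.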

Dockerfiles

You might already have Dockerfiles for development or some other environment. My advice is to have a separate Dockerfile optimised for development, and another optimised for Production.

Perhaps you need auto-reloading and NPM in development? Great! Don't ruin your production builds and artifacts with cruft that you don't need. Reduce the size and build time aggressively for production.

In our design, we have an app server, a celery worker, and a celery beat. Use the same Dockerfile for all 3 servers, but change the Entrypoint so the right service is started. We don't want to be building 3 slightly different containers.
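One way to picture this: the three roles share a single image and differ only in the command Kubernetes runs (the container `command` field overrides the image's entrypoint). The image reference, the project name `myproject`, and the replica counts below are placeholders.

```python
IMAGE = "registry.example.com/django-app:1.0.0"  # hypothetical image reference

# One command per role; "myproject" is a placeholder Django project name.
COMMANDS = {
    "web": ["gunicorn", "myproject.wsgi:application", "--bind", "0.0.0.0:8000"],
    "worker": ["celery", "-A", "myproject", "worker", "--loglevel=info"],
    "beat": ["celery", "-A", "myproject", "beat", "--loglevel=info"],
}

# Celery beat must stay a single instance so scheduled tasks are not duplicated.
REPLICAS = {"web": 2, "worker": 2, "beat": 1}


def deployment_for(role):
    """Build a minimal Deployment for one role, always using the shared image."""
    labels = {"app": "django-app", "role": role}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"django-app-{role}"},
        "spec": {
            "replicas": REPLICAS[role],
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": role, "image": IMAGE, "command": COMMANDS[role]}
                    ]
                },
            },
        },
    }


deployments = [deployment_for(role) for role in COMMANDS]
for d in deployments:
    container = d["spec"]["template"]["spec"]["containers"][0]
    print(d["metadata"]["name"], "->", " ".join(container["command"]))
```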

Deploying to Kubernetes

We have a good idea what objects we require. Our application is ready. How does it all come together?

With YAML. Specifically, having Helm Charts produce YAML.

Helm Charts are packages. You can use Helm to deploy Charts onto Kubernetes. Helm Charts represent fully running Applications like Postgres, or our own custom Django application.

Helm Charts can have dependencies. They may also have configuration and runtime options that change how an application can be deployed. For example, you may deploy a chart with a STAGING flag set, that additionally deploys a local Postgres rather than relying on hosted RDS. Sound familiar?

You will spend a lot of time designing your Helm Chart YAML files. They are basically Go templates that render Kubernetes-compatible YAML for each object in our diagram above. We hope to be able to abstract the chart enough that we can deploy arbitrary Django applications based on an image name.
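The snippet below is not Helm. It is a plain Python stand-in for what a chart's templates do with a values file, where a real chart would use a conditional such as `{{ if .Values.staging }}` and be installed with something like `helm install --set staging=true`. The value keys (`name`, `image`, `staging`, `databaseHost`) and the Postgres image tag are hypothetical.

```python
def render_release(values):
    """Return a simplified list of the objects a release would contain."""
    name = values["name"]
    image = values["image"]

    # The three application roles are always deployed from the same image.
    objects = [
        {"kind": "Deployment", "name": f"{name}-{role}", "image": image}
        for role in ("web", "worker", "beat")
    ]

    if values.get("staging", False):
        # Staging: run Postgres inside the cluster and point the Service at it.
        objects.append(
            {"kind": "Deployment", "name": f"{name}-postgres", "image": "postgres:16"}
        )
        objects.append(
            {"kind": "Service", "name": f"{name}-postgres", "target": "in-cluster"}
        )
    else:
        # Production: the Service only aliases the managed database host.
        objects.append(
            {"kind": "Service", "name": f"{name}-postgres", "target": values["databaseHost"]}
        )
    return objects


staging = render_release(
    {"name": "shop", "image": "registry.example.com/shop:1.0.0", "staging": True}
)
production = render_release(
    {
        "name": "shop",
        "image": "registry.example.com/shop:1.0.0",
        "databaseHost": "mydb.example.us-east-1.rds.amazonaws.com",
    }
)

for obj in staging:
    print("staging   ", obj["kind"], obj["name"])
for obj in production:
    print("production", obj["kind"], obj["name"])
```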

Monitoring and Operations

We've covered the concepts required for setting up a cluster and deploying the application, but it's harder to find information on monitoring your application and operating it in production. I'll briefly touch on some of those facets now.

Logging

Centralised logging is critical for any large application, but doubly so for one running within Kubernetes. You can't just hop onto a node and tail log files, and doing any kind of post mortem analysis on dying nodes becomes impossible.

The standard wisdom is to run a logging daemon within each Deployment or Node that takes your logs and sends them to the logging service of your choice. There are Helm charts for major logging services that you can reuse.

Logging daemons typically run as DaemonSets, which is another Kubernetes object, but one you probably won't interact with directly.
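On the application side, one common arrangement (an assumption here, not something we have settled on) is to have Django log to stdout so that a node-level daemon can collect the container's output. A minimal `LOGGING` setting along those lines might look like this; the format string and logger name are arbitrary.

```python
import logging
import logging.config

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "plain": {"format": "%(asctime)s %(levelname)s %(name)s %(message)s"},
    },
    "handlers": {
        # Write to stdout instead of a file inside the container.
        "stdout": {
            "class": "logging.StreamHandler",
            "stream": "ext://sys.stdout",
            "formatter": "plain",
        },
    },
    "root": {"handlers": ["stdout"], "level": "INFO"},
}

# Django applies the LOGGING setting itself; this call is only for the demo.
logging.config.dictConfig(LOGGING)
logging.getLogger("myapp").info("application started")
```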

Monitoring

Prometheus is the standard monitoring tool used within Kubernetes clusters. Your application (and services) can export metrics that Prometheus will then gather.

We'll continue to use New Relic for our applications though, as it gives a lot of great insight into your Django app. Within your web/celery startup script, run it with newrelic-admin as normal. Deploy your newrelic.ini as a ConfigMap.
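As a sketch of how that could fit together, the container below wraps its start command with `newrelic-admin run-program` and reads `newrelic.ini` from a ConfigMap mounted as a file. The ConfigMap name, mount path, image, and project name are placeholders, and the license key would more sensibly come from a Secret than from the ConfigMap.

```python
NEWRELIC_MOUNT = "/etc/newrelic"  # hypothetical mount path


def with_new_relic(command):
    """Prefix a start command so it runs under the New Relic agent."""
    return ["newrelic-admin", "run-program", *command]


# Mount the ConfigMap as a directory; each key becomes a file of the same name.
volume = {"name": "newrelic-config", "configMap": {"name": "newrelic-config"}}

container = {
    "name": "web",
    "image": "registry.example.com/django-app:1.0.0",
    "command": with_new_relic(
        ["gunicorn", "myproject.wsgi:application", "--bind", "0.0.0.0:8000"]
    ),
    "env": [
        # Tells the agent where to find its configuration file.
        {"name": "NEW_RELIC_CONFIG_FILE", "value": f"{NEWRELIC_MOUNT}/newrelic.ini"},
    ],
    "volumeMounts": [
        {"name": volume["name"], "mountPath": NEWRELIC_MOUNT, "readOnly": True}
    ],
}

pod_spec = {"containers": [container], "volumes": [volume]}
print(" ".join(container["command"]))
```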

SSL

If you're using AWS Certificate Manager with your Application Load Balancer, then SSL is mostly handled for you. There are also Helm charts for deploying Let's Encrypt into your Kubernetes cluster.

DNS

This one is a bit trickier, and I'm not sure we've fully landed on a solution at this time. Amazon Route 53 is the managed DNS service, but what is going to provision a new zone for an instance of our application?

There are tools like Pulumi that allow you to program your infrastructure with nice Kubernetes integration. It may make sense to have Pulumi provision the hosted zone, and then deploy your Helm chart into Kubernetes.

Auto Scaling

There are two systems that you're going to need to autoscale now. The nodes that the cluster is running on, and the number of application instances (Pods/Deployments) you want to run.

The cluster-autoscaler Helm chart claims to support scaling the worker nodes, while Kubernetes itself supports scaling your application through the Horizontal Pod Autoscaler.
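For the Pod side, a Horizontal Pod Autoscaler is just another object pointing at a Deployment. The sketch below assumes the `autoscaling/v2` API and a CPU utilization target, with a hypothetical Deployment name and thresholds. It also includes the basic scaling rule from the Kubernetes documentation, which ignores details such as the tolerance band and stabilization windows.

```python
import json
import math


def hpa_manifest(deployment, min_replicas, max_replicas, cpu_percent):
    """Build a HorizontalPodAutoscaler targeting average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_percent,
                        },
                    },
                }
            ],
        },
    }


def desired_replicas(current_replicas, current_utilization, target_utilization):
    """Basic HPA rule: ceil(current replicas * current metric / target metric)."""
    return math.ceil(current_replicas * current_utilization / target_utilization)


hpa = hpa_manifest("django-app-web", min_replicas=2, max_replicas=10, cpu_percent=60)
print(json.dumps(hpa, indent=2))
print(desired_replicas(4, 90, 60))
```

CPU utilization targets only work if the containers declare CPU resource requests, since utilization is measured relative to the request.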

Summary

I hope the information presented above is useful and answers some questions you might have already had about moving to Kubernetes. We found that working our way through and understanding the concepts behind Kubernetes has led to much more productive conversations about what a migration might look like, and the effort required to make that migration. A full migration is definitely on the cards for this year, and we'll be documenting our journey as we go.

If there are any corrections needed above, please let us know in the comments. We'd also love to hear about your experiences with your own migration and what some of the sticking points were.

And, as always, if infrastructure is your thing and you think you can help us with the migration to Kubernetes, We're Hiring!